1.8 KiB

1.8 KiB

Lidar to Camera Extrinsic Calibration

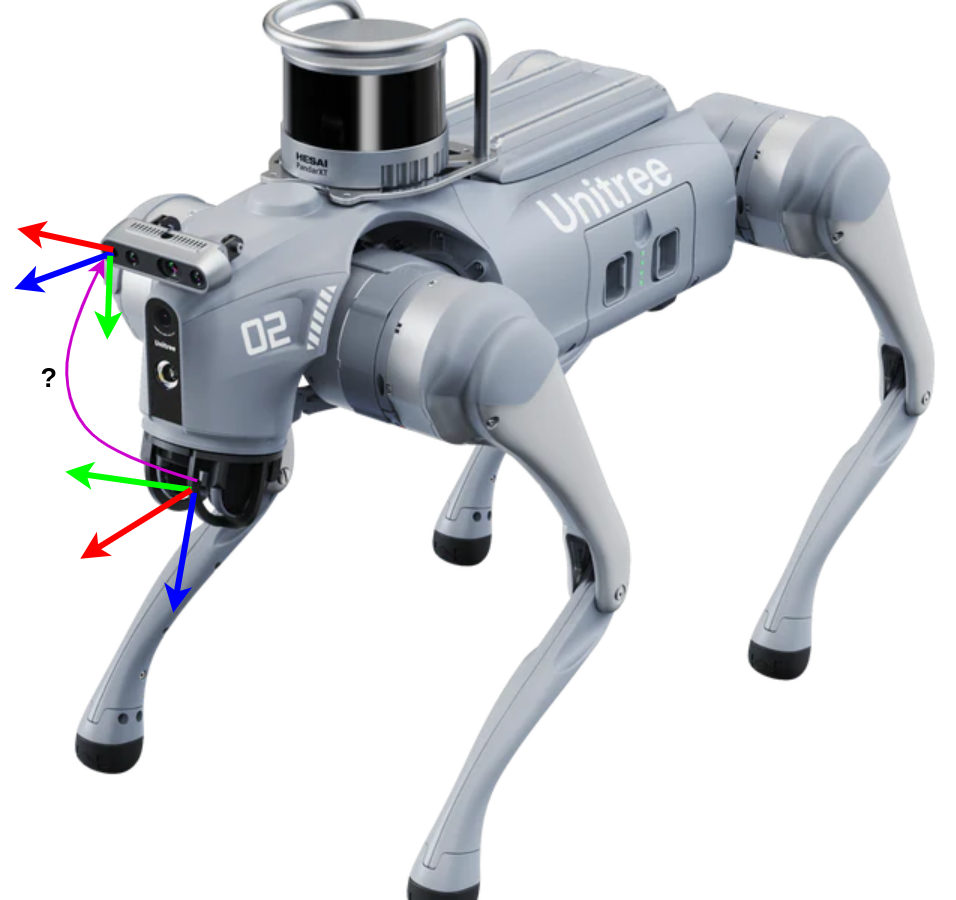

The goal of this procedure is to find the extrinsic transformation between the Lidar coordinate frame and that of the camera in front of the robot:

First pull the docker image:

docker pull koide3/direct_visual_lidar_calibration:humble

Then activate the X server connection port:

xhost +

and run the container with GUI support:

docker run \

-it \

--rm \

--net host \

--gpus all \

-e DISPLAY=$DISPLAY \

-v $HOME/.Xauthority:/root/.Xauthority \

-v /path/to/input/bags:/tmp/input_bags \

-v /path/to/save/result:/tmp/preprocessed \

koide3/direct_visual_lidar_calibration:humble

Inside the container generate images from the LiDAR data (Preprocessing):

ros2 run direct_visual_lidar_calibration preprocess /path/to/bag/dir /path/to/result/dir \

--image_topic /camera/color/image_raw \

--points_topic /utlidar/cloud \

--camera_model plumb_bob \

--camera_intrinsics 379.7099304199219,320.3064270019531,379.3695983886719,243.11753845214844 \

--camera_distortion_coeffs -0.057967256754636765,0.0704321563243866,-0.00015285948757082224,0.0006057045538909733,-0.022366832941770554

then manually select the correspondences between the 3D pointcloud and the image:

ros2 run direct_visual_lidar_calibration initial_guess_manual /path/to/result/dir

Finally perform a refinement step by running:

ros2 run direct_visual_lidar_calibration calibrate /path/to/result/dir

Source

This procedure has been taken from direct_visual_lidar_calibration toolbox.