
XCodeReviewer - Your Intelligent Code Audit Partner 🚀

XCodeReviewer is a modern code audit platform powered by Large Language Models (LLMs), designed to provide developers with intelligent, comprehensive, and in-depth code quality analysis and review.

🌟 Why Choose XCodeReviewer?

In the fast-paced world of software development, ensuring code quality is crucial. Traditional code audit tools rely on rigid rules and are inefficient, while manual audits are time-consuming and labor-intensive. XCodeReviewer leverages large language models (Google Gemini by default, with 10+ platforms supported) to revolutionize the way code reviews are conducted:

- 🤖 AI-Driven Deep Analysis: Goes beyond traditional static analysis to understand code intent and uncover deep logical issues.

- 🎯 Multi-dimensional, Comprehensive Assessment: Covers security, performance, maintainability, and code style for a 360-degree quality evaluation.

- 💡 Clear, Actionable Fix Suggestions: An innovative What-Why-How approach that not only tells you "what" the problem is, but also explains "why" it matters and shows "how" to fix it with specific code examples.

- ✅ Multi-Platform LLM/Local Model Support: API integration for 10+ mainstream platforms (Gemini, OpenAI, Claude, Qwen, DeepSeek, Zhipu AI, Kimi, ERNIE, MiniMax, Doubao, and Ollama local models), freely configurable and switchable.

- ✨ Modern, Beautiful User Interface: Built with React + TypeScript, providing a smooth and intuitive user experience.

🎬 Project Demo

Main Feature Interfaces

📊 Intelligent Dashboard

Real-time display of project statistics, quality trends, and system performance, providing comprehensive code audit overview


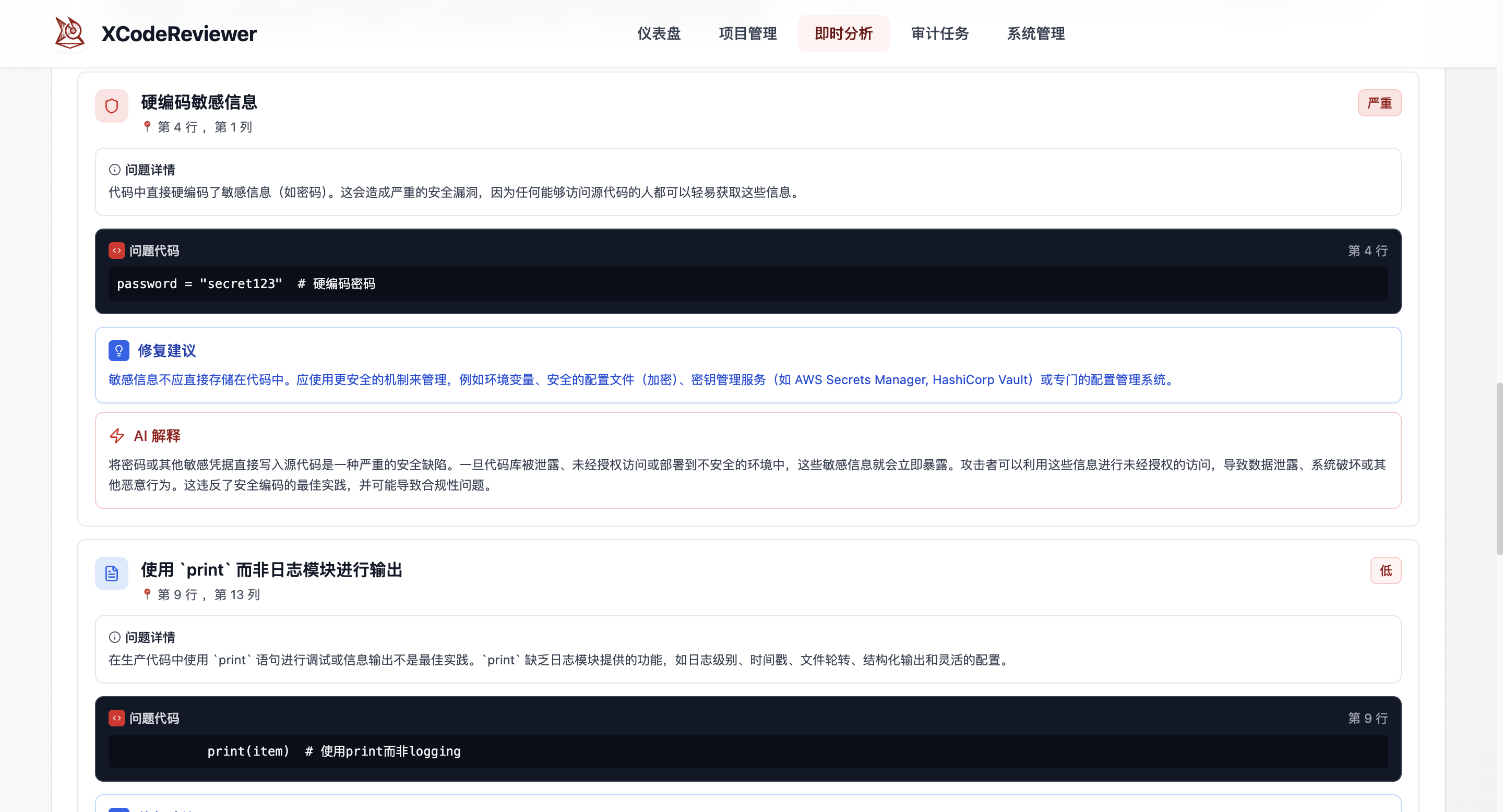

⚡ Instant Analysis

Support for quick code snippet analysis with detailed What-Why-How explanations and fix suggestions


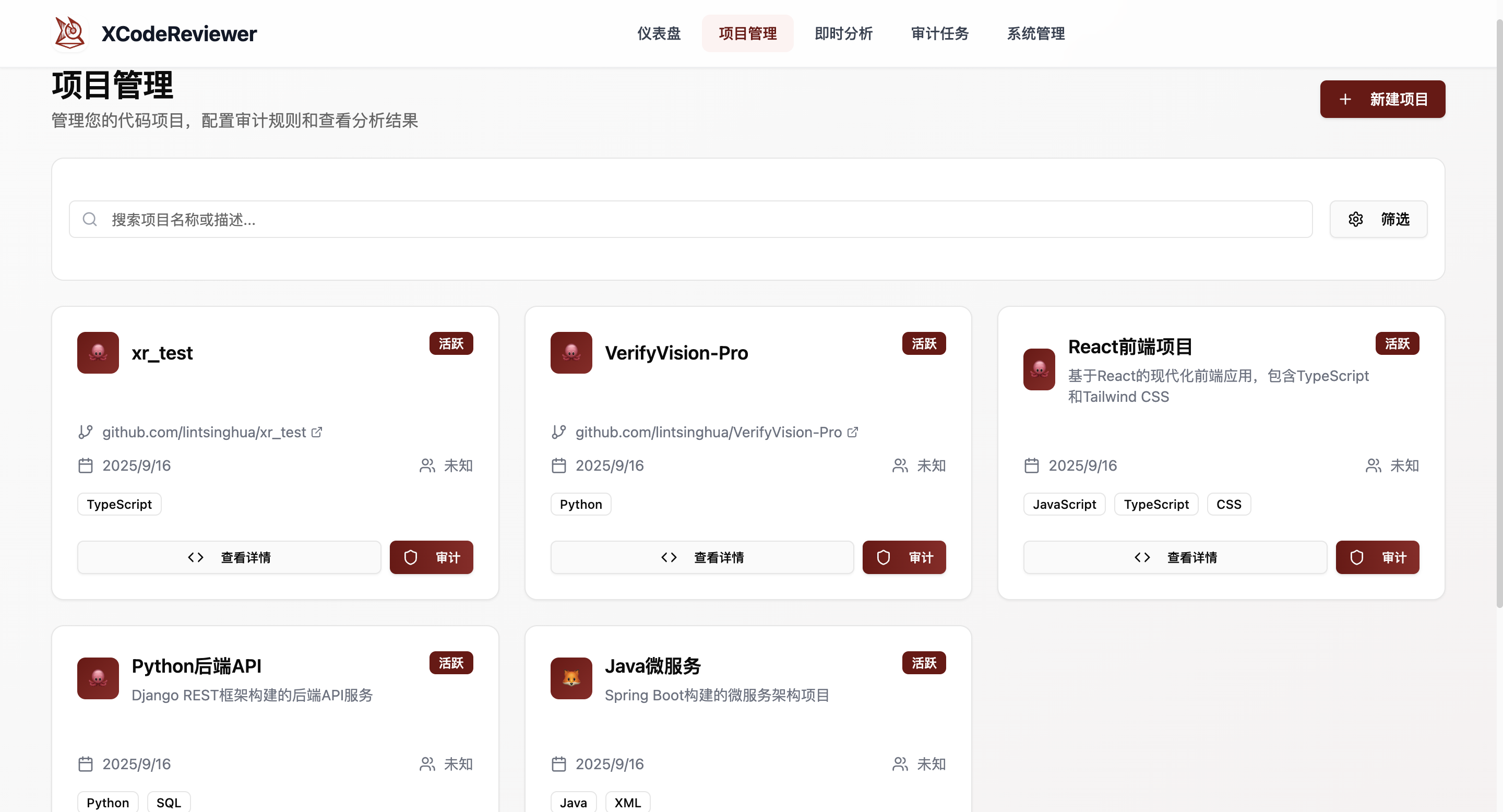

🚀 Project Management

Integrated GitHub/GitLab repositories, supporting multi-language project audits and batch code analysis


🚀 Quick Start

🐳 Docker Deployment (Recommended)

Deploy quickly with Docker, with no local Node.js setup required.

1. Clone the project

```bash
git clone https://github.com/lintsinghua/XCodeReviewer.git
cd XCodeReviewer
```

2. Configure environment variables

```bash
cp .env.example .env
# Edit the .env file and configure the LLM provider and API key
# Method 1: Using Universal Configuration (Recommended)
# VITE_LLM_PROVIDER=gemini
# VITE_LLM_API_KEY=your_api_key
#
# Method 2: Using Platform-Specific Configuration
# VITE_GEMINI_API_KEY=your_gemini_api_key
```

3. Build and start

```bash
docker-compose build
docker-compose up -d
```

4. Access the application

Open `http://localhost:5174` in your browser.

Common commands:

```bash
docker-compose logs -f    # View logs
docker-compose restart    # Restart service
docker-compose down       # Stop service
```

💻 Local Development Deployment

For development or custom modifications, use local deployment.

Requirements

- Node.js: 18+

- pnpm: 8+ (recommended), or npm/yarn

- LLM API Key: for AI code analysis (e.g., Google Gemini; not needed for Ollama local models)

Installation & Setup

1. Clone the project

```bash
git clone https://github.com/lintsinghua/XCodeReviewer.git
cd XCodeReviewer
```

2. Install dependencies

```bash
# Using pnpm (recommended)
pnpm install
# Or using npm
npm install
# Or using yarn
yarn install
```

3. Configure environment variables

```bash
# Copy environment template
cp .env.example .env
```

Edit the `.env` file and configure the necessary environment variables:

```bash
# LLM Universal Configuration (Recommended)
VITE_LLM_PROVIDER=gemini              # Choose provider (gemini|openai|claude|qwen|deepseek, etc.)
VITE_LLM_API_KEY=your_api_key_here    # Corresponding API Key
VITE_LLM_MODEL=gemini-2.5-flash       # Model name (optional)

# Or use platform-specific configuration
VITE_GEMINI_API_KEY=your_gemini_api_key_here
VITE_OPENAI_API_KEY=your_openai_api_key_here
VITE_CLAUDE_API_KEY=your_claude_api_key_here
# ... Supports 10+ mainstream platforms

# Supabase Configuration (Optional, for data persistence)
VITE_SUPABASE_URL=https://your-project.supabase.co
VITE_SUPABASE_ANON_KEY=your-anon-key-here

# GitHub Integration (Optional, for repository analysis)
VITE_GITHUB_TOKEN=your_github_token_here

# Application Configuration
VITE_APP_ID=xcodereviewer

# Analysis Configuration
VITE_MAX_ANALYZE_FILES=40
VITE_LLM_CONCURRENCY=2
VITE_LLM_GAP_MS=500
```

4. Start the development server

```bash
pnpm dev
```

5. Access the application

Open `http://localhost:5174` in your browser.

⚙️ Advanced Configuration (Optional)

If you encounter timeout or connection issues, adjust these settings:

```bash
# Increase timeout (default 150000ms)
VITE_LLM_TIMEOUT=300000

# Use custom API endpoint (for proxy or private deployment)
VITE_LLM_BASE_URL=https://your-proxy-url.com

# Reduce concurrency and increase request gap (to avoid rate limiting)
VITE_LLM_CONCURRENCY=1
VITE_LLM_GAP_MS=1000
```
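A timeout such as `VITE_LLM_TIMEOUT` can be enforced on the client with the standard `AbortController` API. The following is a minimal TypeScript sketch, not XCodeReviewer's actual code; `withTimeout` and `fakeLlmCall` are hypothetical names, and `fakeLlmCall` only simulates a slow LLM request.

```typescript
// Hypothetical helper (not from the XCodeReviewer codebase): aborts `task`
// if it has not settled within `timeoutMs` milliseconds.
async function withTimeout<T>(
  task: (signal: AbortSignal) => Promise<T>,
  timeoutMs: number,
): Promise<T> {
  const controller = new AbortController();
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    // The task must honor the signal (fetch() does this natively).
    return await task(controller.signal);
  } finally {
    // Clear the timer whether the task succeeded, failed, or was aborted.
    clearTimeout(timer);
  }
}

// Simulated LLM call that resolves after `delayMs` unless it is aborted first.
function fakeLlmCall(delayMs: number, signal: AbortSignal): Promise<string> {
  return new Promise((resolve, reject) => {
    const t = setTimeout(() => resolve("analysis result"), delayMs);
    signal.addEventListener("abort", () => {
      clearTimeout(t);
      reject(new Error("Request Timeout"));
    });
  });
}
```

With a real request, the task would forward the signal, e.g. `withTimeout((signal) => fetch(url, { ...init, signal }), 300000)`.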

🔧 FAQ

Q: How to quickly switch between LLM platforms?

Change the value of `VITE_LLM_PROVIDER` and make sure the matching API key is set:

```bash
# Switch to OpenAI
VITE_LLM_PROVIDER=openai
VITE_OPENAI_API_KEY=your_openai_key

# Switch to Claude
VITE_LLM_PROVIDER=claude
VITE_CLAUDE_API_KEY=your_claude_key

# Switch to Qwen
VITE_LLM_PROVIDER=qwen
VITE_QWEN_API_KEY=your_qwen_key
```

Q: What to do when encountering "Request Timeout" error?

- Increase timeout: Set `VITE_LLM_TIMEOUT=300000` in `.env` (5 minutes)

- Check network connection: Ensure you can reach the API endpoint

- Use a proxy: Configure `VITE_LLM_BASE_URL` if the API is blocked

- Switch platform: Try another LLM provider, such as DeepSeek (more accessible from mainland China)

Q: How to use Chinese platforms to avoid network issues?

Recommended Chinese platforms for faster access:

```bash
# Use Qwen (Recommended)
VITE_LLM_PROVIDER=qwen
VITE_QWEN_API_KEY=your_qwen_key

# Or use DeepSeek (Cost-effective)
VITE_LLM_PROVIDER=deepseek
VITE_DEEPSEEK_API_KEY=your_deepseek_key

# Or use Zhipu AI
VITE_LLM_PROVIDER=zhipu
VITE_ZHIPU_API_KEY=your_zhipu_key
```

Q: What's the API Key format for Baidu ERNIE?

The Baidu key must combine the API Key and the Secret Key, separated by a colon:

```bash
VITE_LLM_PROVIDER=baidu
VITE_BAIDU_API_KEY=your_api_key:your_secret_key
VITE_BAIDU_MODEL=ERNIE-3.5-8K
```

Get the API Key and Secret Key from the Baidu Qianfan Platform.
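Because both credentials travel in one variable, the value has to be split before use. A minimal TypeScript sketch of that parsing step follows; `parseBaiduKey` is a hypothetical name, and this is not necessarily how XCodeReviewer handles the key internally.

```typescript
// Hypothetical parsed form of the combined "API_KEY:SECRET_KEY" value.
interface BaiduCredentials {
  apiKey: string;
  secretKey: string;
}

function parseBaiduKey(combined: string): BaiduCredentials {
  // Split on the first colon only, so the secret may itself contain ":".
  const idx = combined.indexOf(":");
  const apiKey = idx >= 0 ? combined.slice(0, idx).trim() : "";
  const secretKey = idx >= 0 ? combined.slice(idx + 1).trim() : "";
  // Reject values where either half is missing.
  if (!apiKey || !secretKey) {
    throw new Error("VITE_BAIDU_API_KEY must use the format API_KEY:SECRET_KEY");
  }
  return { apiKey, secretKey };
}
```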

Q: How to configure proxy or relay service?

Use `VITE_LLM_BASE_URL` (or a platform-specific variable such as `VITE_OPENAI_BASE_URL`) to configure a custom endpoint:

```bash
# OpenAI relay example
VITE_LLM_PROVIDER=openai
VITE_OPENAI_API_KEY=your_key
VITE_OPENAI_BASE_URL=https://api.your-proxy.com/v1

# Or use universal config
VITE_LLM_PROVIDER=openai
VITE_LLM_API_KEY=your_key
VITE_LLM_BASE_URL=https://api.your-proxy.com/v1
```

Q: How to configure multiple platforms and switch quickly?

Configure all platform keys in `.env`, then switch by changing `VITE_LLM_PROVIDER`. Leave `VITE_LLM_API_KEY` unset in this setup, because the universal key takes priority over platform-specific keys.

```bash
# Currently active platform
VITE_LLM_PROVIDER=gemini

# Pre-configure all platforms
VITE_GEMINI_API_KEY=gemini_key
VITE_OPENAI_API_KEY=openai_key
VITE_CLAUDE_API_KEY=claude_key
VITE_QWEN_API_KEY=qwen_key
VITE_DEEPSEEK_API_KEY=deepseek_key

# Just change the VITE_LLM_PROVIDER value above to switch
```
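The priority rule (the universal `VITE_LLM_API_KEY` overrides platform-specific keys, per the environment variable table) can be sketched in TypeScript as follows. `resolveApiKey` is hypothetical and assumes the platform variable follows the `VITE_<PROVIDER>_API_KEY` naming used in this README; in a Vite app the `env` argument would be `import.meta.env`.

```typescript
type Env = Record<string, string | undefined>;

// Hypothetical: pick the active provider and the API key it should use.
function resolveApiKey(env: Env): { provider: string; apiKey: string } {
  const provider = (env.VITE_LLM_PROVIDER || "gemini").trim().toLowerCase();
  // Platform-specific variable, e.g. VITE_OPENAI_API_KEY for "openai".
  const platformVar = `VITE_${provider.toUpperCase()}_API_KEY`;
  // The universal key wins; the platform-specific key is the fallback.
  const apiKey = env.VITE_LLM_API_KEY || env[platformVar];
  if (!apiKey) {
    throw new Error(`No API key found: set VITE_LLM_API_KEY or ${platformVar}`);
  }
  return { provider, apiKey };
}
```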

Q: How to use Ollama local models?

Ollama allows you to run open-source models locally without an API key, protecting data privacy:

1. Install Ollama

```bash
# macOS / Linux
curl -fsSL https://ollama.com/install.sh | sh

# Windows
# Download and install: https://ollama.com/download
```

2. Pull and run a model

```bash
# Pull Llama3 model
ollama pull llama3

# Verify the model is available
ollama list
```

3. Configure XCodeReviewer

```bash
VITE_LLM_PROVIDER=ollama
VITE_LLM_API_KEY=ollama                        # Can be any value
VITE_LLM_MODEL=llama3                          # Model name to use
VITE_LLM_BASE_URL=http://localhost:11434/v1    # Ollama API address
```

Recommended Models:

- `llama3` - Meta's open-source model with excellent performance
- `codellama` - Code-optimized model
- `qwen2.5` - Open-source version of Alibaba Qwen
- `deepseek-coder` - DeepSeek's code-specialized model

More models available at: https://ollama.com/library
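Ollama's `/v1` address is an OpenAI-compatible API, so a review request is a standard chat-completions call. The TypeScript sketch below only builds the request; `buildChatRequest` and its system prompt are hypothetical (not XCodeReviewer's actual prompt), and the same helper should also work with relay endpoints configured through `VITE_LLM_BASE_URL`, assuming they are OpenAI-compatible.

```typescript
// Hypothetical request shape; `init` can be passed straight to fetch().
interface ChatRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Builds a chat-completions request for an OpenAI-compatible base URL,
// e.g. Ollama at http://localhost:11434/v1.
function buildChatRequest(baseUrl: string, apiKey: string, model: string, code: string): ChatRequest {
  // Drop trailing slashes so ".../v1/" and ".../v1" give the same URL.
  const url = `${baseUrl.replace(/\/+$/, "")}/chat/completions`;
  const body = {
    model,
    messages: [
      // Illustrative prompt only.
      { role: "system", content: "You are a code reviewer. Report bugs, security, performance, style, and maintainability issues." },
      { role: "user", content: code },
    ],
    temperature: 0.2,
  };
  return {
    url,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        // Ollama ignores this value; hosted or relay endpoints use it for authentication.
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify(body),
    },
  };
}

// Usage (requires a running Ollama server):
// const { url, init } = buildChatRequest("http://localhost:11434/v1", "ollama", "llama3", source);
// const data = await (await fetch(url, init)).json();
// console.log(data.choices[0].message.content);
```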

🔑 Getting API Keys

🎯 Supported LLM Platforms

XCodeReviewer now supports multiple mainstream LLM platforms. You can choose freely based on your needs:

International Platforms:

- Google Gemini - Recommended for code analysis, generous free tier Get API Key

- OpenAI GPT - Stable and reliable, best overall performance Get API Key

- Anthropic Claude - Strong code understanding capabilities Get API Key

- DeepSeek - Cost-effective Get API Key

Chinese Platforms:

- Alibaba Qwen (通义千问) Get API Key

- Zhipu AI (GLM) Get API Key

- Moonshot (Kimi) Get API Key

- Baidu ERNIE (文心一言) Get API Key

- MiniMax Get API Key

- Bytedance Doubao (豆包) Get API Key

Local Deployment:

- Ollama - Run open-source models locally, supports Llama3, Mistral, CodeLlama, etc. Installation Guide

📝 Configuration Examples

Configure your chosen platform in the .env file:

```bash
# Method 1: Using Universal Configuration (Recommended)
VITE_LLM_PROVIDER=gemini            # Choose provider
VITE_LLM_API_KEY=your_api_key       # Corresponding API Key
VITE_LLM_MODEL=gemini-2.5-flash     # Model name (optional)

# Method 2: Using Platform-Specific Configuration
VITE_GEMINI_API_KEY=your_gemini_api_key
VITE_OPENAI_API_KEY=your_openai_api_key
VITE_CLAUDE_API_KEY=your_claude_api_key
# ... Other platform configurations

# Using Ollama Local Models (No API Key Required)
VITE_LLM_PROVIDER=ollama
VITE_LLM_API_KEY=ollama                        # Can be any value
VITE_LLM_MODEL=llama3                          # Model name to use
VITE_LLM_BASE_URL=http://localhost:11434/v1    # Ollama API address (optional)
```

Quick Platform Switch: Simply modify the value of `VITE_LLM_PROVIDER` to switch between different platforms!

💡 Tip: For detailed configuration instructions, please refer to the `.env.example` file.

Supabase Configuration (Optional)

- Visit Supabase to create a new project

- Get the URL and anonymous key from the project settings

- Run the database migration script:

  ```bash
  # Print the schema, then paste and run it in the Supabase SQL Editor
  cat supabase/migrations/full_schema.sql
  ```

- If Supabase is not configured, the system runs in demo mode without data persistence
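The demo-mode fallback described above amounts to checking that both Supabase variables are present. A minimal TypeScript sketch, using the hypothetical name `persistenceMode`; the real check in XCodeReviewer may differ.

```typescript
type Env = Record<string, string | undefined>;
type PersistenceMode = "supabase" | "demo";

// Hypothetical: persistence is enabled only when BOTH variables are non-empty.
function persistenceMode(env: Env): PersistenceMode {
  const url = env.VITE_SUPABASE_URL?.trim();
  const key = env.VITE_SUPABASE_ANON_KEY?.trim();
  // In "supabase" mode a client would typically be created with
  // createClient(url, key) from "@supabase/supabase-js".
  return url && key ? "supabase" : "demo";
}
```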

✨ Core Features

🚀 Project Management

- One-click Repository Integration: Seamlessly connect with GitHub, GitLab, and other mainstream platforms.

- Multi-language "Full Stack" Support: Covers popular languages like JavaScript, TypeScript, Python, Java, Go, Rust, and more.

- Flexible Branch Auditing: Support for precise analysis of specified code branches.

⚡ Instant Analysis

- Code Snippet "Quick Paste": Directly paste code in the web interface for immediate analysis results.

- 10+ Language Instant Support: Meet your diverse code analysis needs.

- Fast Response: Quickly get code quality scores and optimization suggestions.

🧠 Intelligent Auditing

- AI Deep Code Understanding: Supports multiple mainstream LLM platforms (Gemini, OpenAI, Claude, Qwen, DeepSeek, etc.), providing intelligent analysis beyond keyword matching.

- Five Core Detection Dimensions:

- 🐛 Potential Bugs: Precisely capture logical errors, boundary conditions, and null pointer issues.

- 🔒 Security Vulnerabilities: Identify SQL injection, XSS, sensitive information leakage, and other security risks.

- ⚡ Performance Bottlenecks: Discover inefficient algorithms, memory leaks, and unreasonable asynchronous operations.

- 🎨 Code Style: Ensure code follows industry best practices and unified standards.

- 🔧 Maintainability: Evaluate code readability, complexity, and modularity.

💡 Explainable Analysis (What-Why-How)

- What: Clearly identify problems in the code.

- Why: Detailed explanation of potential risks and impacts the problem may cause.

- How: Provide specific, directly usable code fix examples.

- Precise Code Location: Quickly jump to the problematic line and column.
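One way to model a single finding that carries the What-Why-How explanation and a precise location is shown below. This is a hypothetical TypeScript shape: the field names and the four severity levels are assumptions, not XCodeReviewer's actual types.

```typescript
// Hypothetical types: the five detection dimensions from the feature list,
// plus an assumed four-level severity scale.
type IssueType = "bug" | "security" | "performance" | "style" | "maintainability";
type Severity = "critical" | "high" | "medium" | "low";

interface AuditIssue {
  type: IssueType;
  severity: Severity;
  what: string; // What: the problem found in the code
  why: string; // Why: the risk or impact it may cause
  how: string; // How: a concrete fix, ideally with example code
  line: number; // 1-based line of the problematic code
  column: number; // 1-based column
}

// Render one issue as plain text in What-Why-How order.
function formatIssue(issue: AuditIssue): string {
  return [
    `[${issue.severity.toUpperCase()}] ${issue.type} at ${issue.line}:${issue.column}`,
    `What: ${issue.what}`,
    `Why: ${issue.why}`,
    `How: ${issue.how}`,
  ].join("\n");
}
```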

📊 Visual Reports

- Code Quality Dashboard: Provides comprehensive quality assessment from 0-100, making code health status clear at a glance.

- Multi-dimensional Issue Statistics: Classify and count issues by type and severity.

- Quality Trend Analysis: Display code quality changes over time through charts.
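Classifying and counting issues by type and severity is a simple grouping step. The TypeScript sketch below uses a hypothetical minimal `Finding` shape rather than XCodeReviewer's real data model.

```typescript
// Hypothetical minimal finding: only the fields needed for statistics.
interface Finding {
  type: string; // e.g. "bug", "security", "performance"
  severity: string; // e.g. "high", "medium", "low"
}

// Count findings per type, then per severity within each type.
function countByTypeAndSeverity(findings: Finding[]): Record<string, Record<string, number>> {
  const counts: Record<string, Record<string, number>> = {};
  for (const f of findings) {
    if (!counts[f.type]) counts[f.type] = {};
    const bySeverity = counts[f.type];
    bySeverity[f.severity] = (bySeverity[f.severity] ?? 0) + 1;
  }
  return counts;
}
```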

🛠️ Tech Stack

| Category | Technology | Description |
|---|---|---|
| Frontend Framework | React 18, TypeScript, Vite | Modern frontend development stack with hot reload and type safety |
| UI Components | Tailwind CSS, Radix UI, Lucide React | Responsive design, accessibility, rich icon library |
| Data Visualization | Recharts | Professional chart library supporting multiple chart types |
| Routing | React Router v6 | Single-page application routing solution |
| State Management | React Hooks, Sonner | Lightweight state management and notification system |
| AI Engine | Multi-Platform LLM | Supports 10+ mainstream platforms including Gemini, OpenAI, Claude, Qwen, DeepSeek |
| Backend Service | Supabase, PostgreSQL | Full-stack backend-as-a-service with real-time database |
| HTTP Client | Axios, Ky | Modern HTTP request libraries |
| Code Quality | Biome, Ast-grep, TypeScript | Code formatting, static analysis, and type checking |
| Build Tools | Vite, PostCSS, Autoprefixer | Fast build tools and CSS processing |

📁 Project Structure

```
XCodeReviewer/
├── src/
│   ├── app/                      # Application configuration
│   │   ├── App.tsx               # Main application component
│   │   ├── main.tsx              # Application entry point
│   │   └── routes.tsx            # Route configuration
│   ├── components/               # React components
│   │   ├── layout/               # Layout components (Header, Footer, PageMeta)
│   │   ├── ui/                   # UI component library (based on Radix UI)
│   │   └── debug/                # Debug components
│   ├── pages/                    # Page components
│   │   ├── Dashboard.tsx         # Dashboard
│   │   ├── Projects.tsx          # Project management
│   │   ├── InstantAnalysis.tsx   # Instant analysis
│   │   ├── AuditTasks.tsx        # Audit tasks
│   │   └── AdminDashboard.tsx    # System management
│   ├── features/                 # Feature modules
│   │   ├── analysis/             # Analysis related services
│   │   │   └── services/         # AI code analysis engine
│   │   └── projects/             # Project related services
│   │       └── services/         # Repository scanning, ZIP file scanning
│   ├── shared/                   # Shared utilities
│   │   ├── config/               # Configuration files (database, environment)
│   │   ├── types/                # TypeScript type definitions
│   │   ├── hooks/                # Custom React Hooks
│   │   ├── utils/                # Utility functions
│   │   └── constants/            # Constants definition
│   └── assets/                   # Static assets
│       └── styles/               # Style files
├── supabase/
│   └── migrations/               # Database migration files
├── public/
│   └── images/                   # Image resources
├── scripts/                      # Build and setup scripts
└── rules/                        # Code rules configuration
```

🎯 Usage Guide

Instant Code Analysis

- Visit the `/instant-analysis` page

- Select the programming language (10+ languages supported)

- Paste code or upload file

- Click "Start Analysis" to get AI analysis results

- View detailed issue reports and fix suggestions

Project Management

- Visit the `/projects` page

- Click "New Project" to create a project

- Configure repository URL and scan parameters

- Start code audit task

- View audit results and issue statistics

Audit Tasks

- Create audit tasks on the project detail page

- Select scan branch and exclusion patterns

- Configure analysis depth and scope

- Monitor task execution status

- View detailed issue reports
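Exclusion patterns and the `VITE_MAX_ANALYZE_FILES` cap together decide which files reach the LLM. The TypeScript sketch below assumes simple glob patterns where `*` matches within one path segment and `**` matches across segments; the pattern syntax XCodeReviewer actually accepts is not documented here, and `globToRegExp` and `selectFiles` are hypothetical names.

```typescript
// Hypothetical: convert a simple glob ("*" and "**" only) into an anchored RegExp.
function globToRegExp(glob: string): RegExp {
  let re = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob[i];
    if (ch === "*") {
      if (glob[i + 1] === "*") {
        re += ".*"; // "**" may cross directory boundaries
        i++;
      } else {
        re += "[^/]*"; // "*" stays within one path segment
      }
    } else {
      // Escape characters that have a special meaning in regular expressions.
      re += ch.replace(/[.+?^${}()|[\]\\]/g, "\\$&");
    }
  }
  return new RegExp(`^${re}$`);
}

// Drop excluded paths, then keep at most `maxFiles` (e.g. VITE_MAX_ANALYZE_FILES).
function selectFiles(paths: string[], excludes: string[], maxFiles: number): string[] {
  const patterns = excludes.map(globToRegExp);
  return paths.filter((p) => !patterns.some((re) => re.test(p))).slice(0, maxFiles);
}
```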

Build and Deploy

```bash
# Development mode
pnpm dev

# Build production version
pnpm build

# Preview build results
pnpm preview

# Code linting
pnpm lint
```

Environment Variables

Core LLM Configuration

| Variable | Required | Default | Description |
|---|---|---|---|
| `VITE_LLM_PROVIDER` | ✅ | `gemini` | LLM provider: `gemini`, `openai`, `claude`, `qwen`, `deepseek`, `zhipu`, `moonshot`, `baidu`, `minimax`, `doubao`, `ollama` |
| `VITE_LLM_API_KEY` | ✅ | - | Universal API key (takes priority over platform-specific keys); may be omitted if the matching platform-specific key is set |
| `VITE_LLM_MODEL` | ❌ | Auto | Model name (uses the platform default if not specified) |
| `VITE_LLM_BASE_URL` | ❌ | - | Custom API endpoint (for proxy, relay, or private deployment) |
| `VITE_LLM_TIMEOUT` | ❌ | `150000` | Request timeout (milliseconds) |
| `VITE_LLM_TEMPERATURE` | ❌ | `0.2` | Temperature (0.0-2.0); controls output randomness |
| `VITE_LLM_MAX_TOKENS` | ❌ | `4096` | Maximum output tokens |
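A minimal sketch of reading these variables with the documented defaults and the 0.0-2.0 temperature range. `loadLlmConfig` is a hypothetical name; XCodeReviewer's own configuration code may parse and validate differently.

```typescript
type Env = Record<string, string | undefined>;

interface LlmConfig {
  provider: string;
  model?: string;
  baseUrl?: string;
  timeoutMs: number;
  temperature: number;
  maxTokens: number;
}

// Provider values listed in the table above.
const PROVIDERS: string[] = [
  "gemini", "openai", "claude", "qwen", "deepseek", "zhipu",
  "moonshot", "baidu", "minimax", "doubao", "ollama",
];

// Parse a numeric variable; fall back to the default when unset, blank, or not a number.
function num(value: string | undefined, fallback: number): number {
  const n = value === undefined || value.trim() === "" ? NaN : Number(value);
  return Number.isFinite(n) ? n : fallback;
}

function loadLlmConfig(env: Env): LlmConfig {
  const provider = (env.VITE_LLM_PROVIDER || "gemini").trim().toLowerCase();
  if (!PROVIDERS.includes(provider)) {
    throw new Error(`Unsupported VITE_LLM_PROVIDER: ${provider}`);
  }
  const temperature = num(env.VITE_LLM_TEMPERATURE, 0.2);
  if (temperature < 0 || temperature > 2) {
    throw new Error("VITE_LLM_TEMPERATURE must be between 0.0 and 2.0");
  }
  return {
    provider,
    model: env.VITE_LLM_MODEL || undefined, // undefined means "use platform default"
    baseUrl: env.VITE_LLM_BASE_URL || undefined,
    timeoutMs: num(env.VITE_LLM_TIMEOUT, 150000),
    temperature,
    maxTokens: num(env.VITE_LLM_MAX_TOKENS, 4096),
  };
}
```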

Platform-Specific API Key Configuration (Optional)

| Variable | Description | Special Requirements |
|---|---|---|
| `VITE_GEMINI_API_KEY` | Google Gemini API Key | - |
| `VITE_GEMINI_MODEL` | Gemini model (default: `gemini-2.5-flash`) | - |
| `VITE_OPENAI_API_KEY` | OpenAI API Key | - |
| `VITE_OPENAI_MODEL` | OpenAI model (default: `gpt-4o-mini`) | - |
| `VITE_OPENAI_BASE_URL` | OpenAI custom endpoint | For relay services |
| `VITE_CLAUDE_API_KEY` | Anthropic Claude API Key | - |
| `VITE_CLAUDE_MODEL` | Claude model (default: `claude-3-5-sonnet-20241022`) | - |
| `VITE_QWEN_API_KEY` | Alibaba Qwen API Key | - |
| `VITE_QWEN_MODEL` | Qwen model (default: `qwen-turbo`) | - |
| `VITE_DEEPSEEK_API_KEY` | DeepSeek API Key | - |
| `VITE_DEEPSEEK_MODEL` | DeepSeek model (default: `deepseek-chat`) | - |
| `VITE_ZHIPU_API_KEY` | Zhipu AI API Key | - |
| `VITE_ZHIPU_MODEL` | Zhipu model (default: `glm-4-flash`) | - |
| `VITE_MOONSHOT_API_KEY` | Moonshot Kimi API Key | - |
| `VITE_MOONSHOT_MODEL` | Kimi model (default: `moonshot-v1-8k`) | - |
| `VITE_BAIDU_API_KEY` | Baidu ERNIE API Key | ⚠️ Format: `API_KEY:SECRET_KEY` |
| `VITE_BAIDU_MODEL` | ERNIE model (default: `ERNIE-3.5-8K`) | - |
| `VITE_MINIMAX_API_KEY` | MiniMax API Key | - |
| `VITE_MINIMAX_MODEL` | MiniMax model (default: `abab6.5-chat`) | - |
| `VITE_DOUBAO_API_KEY` | Bytedance Doubao API Key | - |
| `VITE_DOUBAO_MODEL` | Doubao model (default: `doubao-pro-32k`) | - |
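Resolving which model to call combines the variables above with their documented defaults. This TypeScript sketch assumes `VITE_LLM_MODEL` takes precedence over `VITE_<PROVIDER>_MODEL` (by analogy with the API-key rule; the README does not state this), and `resolveModel` is a hypothetical name. Ollama has no listed default, so in this sketch it requires an explicit model.

```typescript
type Env = Record<string, string | undefined>;

// Default models as listed in the platform-specific table.
const DEFAULT_MODELS: Record<string, string> = {
  gemini: "gemini-2.5-flash",
  openai: "gpt-4o-mini",
  claude: "claude-3-5-sonnet-20241022",
  qwen: "qwen-turbo",
  deepseek: "deepseek-chat",
  zhipu: "glm-4-flash",
  moonshot: "moonshot-v1-8k",
  baidu: "ERNIE-3.5-8K",
  minimax: "abab6.5-chat",
  doubao: "doubao-pro-32k",
};

// Assumed precedence: VITE_LLM_MODEL, then VITE_<PROVIDER>_MODEL, then the default.
function resolveModel(env: Env, provider: string): string {
  const specific = env[`VITE_${provider.toUpperCase()}_MODEL`];
  const model = env.VITE_LLM_MODEL || specific || DEFAULT_MODELS[provider];
  if (!model) {
    throw new Error(`No model configured for provider "${provider}"`);
  }
  return model;
}
```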

Database Configuration (Optional)

| Variable | Required | Description |
|---|---|---|
| `VITE_SUPABASE_URL` | ❌ | Supabase project URL (for data persistence) |
| `VITE_SUPABASE_ANON_KEY` | ❌ | Supabase anonymous key |

💡 Note: Without Supabase config, system runs in demo mode without data persistence

GitHub Integration Configuration (Optional)

| Variable | Required | Description |
|---|---|---|
| `VITE_GITHUB_TOKEN` | ❌ | GitHub Personal Access Token (for repository analysis) |

Analysis Behavior Configuration

| Variable | Default | Description |
|---|---|---|
| `VITE_MAX_ANALYZE_FILES` | `40` | Maximum files per analysis |
| `VITE_LLM_CONCURRENCY` | `2` | Concurrent LLM requests (reduce to avoid rate limiting) |
| `VITE_LLM_GAP_MS` | `500` | Gap between LLM requests (milliseconds; increase to avoid rate limiting) |
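The two rate-limiting settings can be read as a worker pool: at most `VITE_LLM_CONCURRENCY` requests in flight, with each worker pausing `VITE_LLM_GAP_MS` between consecutive requests. This is one possible interpretation, sketched in TypeScript with the hypothetical name `runWithLimits`; XCodeReviewer may space requests differently.

```typescript
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Hypothetical: run `tasks` with at most `concurrency` in flight; each worker
// waits `gapMs` before starting its next request. Results keep input order.
async function runWithLimits<T>(
  tasks: Array<() => Promise<T>>,
  concurrency: number,
  gapMs: number,
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0; // index of the next task to start, shared by all workers

  async function worker(): Promise<void> {
    while (next < tasks.length) {
      const i = next++; // safe: JavaScript runs this synchronously between awaits
      results[i] = await tasks[i]();
      if (next < tasks.length && gapMs > 0) await sleep(gapMs);
    }
  }

  const workerCount = Math.max(1, Math.min(concurrency, tasks.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}
```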

Application Configuration

| Variable | Default | Description |
|---|---|---|
| `VITE_APP_ID` | `xcodereviewer` | Application identifier |

🤝 Contributing

We warmly welcome all forms of contributions! Whether it's submitting issues, creating PRs, or improving documentation, every contribution is important to us. Please contact us for detailed information.

Development Workflow

- Fork this project

- Create your feature branch (`git checkout -b feature/AmazingFeature`)

- Commit your changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the branch (`git push origin feature/AmazingFeature`)

- Create a Pull Request

🙏 Acknowledgments

- Google Gemini AI: Providing powerful AI analysis capabilities

- Supabase: Providing convenient backend-as-a-service support

- Radix UI: Providing accessible UI components

- Tailwind CSS: Providing modern CSS framework

- Recharts: Providing professional chart components

- And all the authors of open source software used in this project!

📞 Contact Us

- Project Link: https://github.com/lintsinghua/XCodeReviewer

- Issue Reports: Issues

- Author Email: tsinghuaiiilove@gmail.com

🎯 Future Plans

Currently, XCodeReviewer is in the rapid prototype verification stage, and its features are still being refined. Based on the project's subsequent development and community suggestions, the roadmap is as follows (to be implemented as soon as possible):

- ✅ Multi-Platform LLM Support: API integration for 10+ mainstream platforms (Gemini, OpenAI, Claude, Qwen, DeepSeek, Zhipu AI, Kimi, ERNIE, MiniMax, Doubao, Ollama local models), freely configurable and switchable.

- ✅ Local Model Support: Added support for Ollama local large models to meet data privacy requirements

- Multi-Agent Collaboration: Consider introducing a multi-agent collaboration architecture that implements an "Agent + Human Dialogue" feedback function, including display of the multi-round dialogue process and human interruption and intervention. The goal is a clearer, more transparent, and supervised auditing process that improves audit quality.

- Professional Report File Generation: Generate professional audit report files in relevant formats for different needs, with customizable report formats.

- Custom Audit Standards: Different teams have their own coding standards, and different projects have specific security requirements; supporting these is exactly what we want to do next. The current version still works in a "semi-black-box mode": the project guides the analysis direction and defines audit standards through prompt engineering, while the actual analysis quality depends on the built-in knowledge of powerful pre-trained AI models. In the future, we will combine methods such as reinforcement learning and supervised fine-tuning to support custom rule configuration, including team-specific rules defined in YAML or JSON and best-practice templates for common frameworks, to produce audit results that better match each team's requirements and standards.

⭐ If this project helps you, please give us a Star! Your support is our motivation to keep moving forward!

📄 Disclaimer

This disclaimer is intended to clarify the responsibilities and risks associated with the use of this open source project and to protect the legitimate rights and interests of project authors, contributors and maintainers. The code, tools and related content provided by this open source project are for reference and learning purposes only.

1. Code Privacy and Security Warning

- ⚠️ Important Notice: This tool analyzes code by calling third-party LLM service provider APIs, which means your code will be sent to the servers of the selected LLM service provider.

- It is strictly prohibited to upload the following types of code:

- Code containing trade secrets, proprietary algorithms, or core business logic

- Code involving state secrets, national defense security, or other classified information

- Code containing sensitive data (such as user data, keys, passwords, tokens, etc.)

- Code restricted by laws and regulations from being transmitted externally

- Proprietary code of clients or third parties (without authorization)

- Users must independently assess the sensitivity of their code and bear full responsibility for uploading code and any resulting information disclosure.

- Recommendation: For sensitive code, use Ollama local models (already supported by this project) or privately deployed LLM services.

- Project authors, contributors, and maintainers assume no responsibility for any information disclosure, intellectual property infringement, legal disputes, or other losses resulting from users uploading sensitive code.

2. Non-Professional Advice

- The code analysis results and suggestions provided by this tool are for reference only and do not constitute professional security audits, code reviews, or legal advice.

- Users must combine manual reviews, professional tools, and other reliable resources to thoroughly validate critical code (especially in high-risk areas such as security, finance, or healthcare).

3. No Warranty and Liability Disclaimer

- This project is provided "as is" without any express or implied warranties, including but not limited to merchantability, fitness for a particular purpose, and non-infringement.

- Authors, contributors, and maintainers shall not be liable for any direct, indirect, incidental, special, punitive, or consequential damages, including but not limited to data loss, system failures, security breaches, or business losses, even if advised of the possibility.

4. Limitations of AI Analysis

- This tool relies on AI models such as Google Gemini, and results may contain errors, omissions, or inaccuracies, with no guarantee of completeness or reliability.

- AI outputs cannot replace human expert judgment; users are solely responsible for the final code quality and any outcomes.

5. Third-Party Services and Data Privacy

- This project integrates multiple third-party LLM services including Google Gemini, OpenAI, Claude, Qwen, DeepSeek, as well as Supabase, GitHub, and other services. Usage is subject to their respective terms of service and privacy policies.

- Code Transmission Notice: User-submitted code will be sent via API to the selected LLM service provider for analysis. The transmission process and data processing follow each service provider's privacy policy.

- Users must obtain and manage API keys independently; this project does not store, transmit, or process user API keys and sensitive information.

- Availability, accuracy, privacy protection, data retention policies, or disruptions of third-party services are the responsibility of the providers; project authors assume no joint liability.

- Data Retention Warning: Different LLM service providers have varying policies on API request data retention and usage. Users should carefully read the privacy policy and terms of use of their chosen service provider before use.

6. User Responsibilities

- Users must ensure their code does not infringe third-party intellectual property rights, does not contain confidential information, and complies with open-source licenses and applicable laws.

- Users bear full responsibility for the content, nature, and compliance of uploaded code, including but not limited to:

- Ensuring code does not contain sensitive information or trade secrets

- Ensuring they have the right to use and analyze the code

- Complying with data protection and privacy laws in their country/region

- Adhering to confidentiality agreements and security policies of their company or organization

- This tool must not be used for illegal, malicious, or rights-infringing purposes; users bear full legal and financial responsibility for all consequences. Authors, contributors, and maintainers shall bear no responsibility for such activities or their consequences and reserve the right to pursue abusers.

7. Open Source Contributions

- Code, content, or suggestions from contributors do not represent the project's official stance; contributors are responsible for their accuracy, security, and compliance.

- Maintainers reserve the right to review, modify, reject, or remove any contributions.

For questions, please contact maintainers via GitHub Issues. This disclaimer is governed by the laws of the project's jurisdiction.